Learn how to optimize your WordPress robots.txt file for better SEO, and understand why this file matters for ranking well on search engines.

Your site’s robots.txt file plays an important role in your site’s overall SEO performance. Search engines read it, and it tells them which parts of your site they should index.

You Need a Robots.txt File

The absence of a robots.txt file will not stop search engines from crawling and indexing your website. However, it is highly recommended that you create one. If you want to submit your site’s XML sitemap to search engines, this file is where they will look for it, unless you have already specified the sitemap in Google Webmaster Tools.

I highly recommend that you immediately create a robots.txt file if you do not have one on your site.

Create a Robots.txt File Easily

The robots.txt file usually resides in your site’s root folder. To view it, you will need to connect to your site using an FTP client or the cPanel file manager.

It is just like any ordinary text file, and you can open it with a plain text editor like Notepad.

If you do not have a robots.txt file in your site’s root directory, then you can always create one. All you need to do is create a new text file on your computer and save it as robots.txt. Next, simply upload it to your site’s root folder.
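If you prefer to script this step, the following is a minimal Python sketch, assuming you generate the file locally before uploading it. The function name `write_robots_txt` and the two starter rules are illustrative placeholders, not required content.

```python
from pathlib import Path
import tempfile


def write_robots_txt(directory, rules):
    """Write the given rule lines to robots.txt inside `directory`."""
    target = Path(directory) / "robots.txt"
    # One directive per line; end the file with a trailing newline.
    target.write_text("\n".join(rules) + "\n", encoding="utf-8")
    return target


# Hypothetical starter rules: address all bots and block nothing.
starter_rules = ["User-agent: *", "Disallow:"]

# A temporary folder stands in for your local working directory.
output_dir = tempfile.mkdtemp()
robots_path = write_robots_txt(output_dir, starter_rules)
print(robots_path.read_text(encoding="utf-8"))
```

Once the file exists locally, upload it to your site’s root folder with your FTP client or file manager.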

Or, if you are using WordPress, you can install the Yoast SEO plugin. After it is activated, a default robots.txt file should appear in the root directory.


Use the Robots.txt File

The format of the robots.txt file is quite simple. The first line usually names a user agent, which is the name of the search bot you are trying to communicate with, for example Googlebot or Bingbot. You can use an asterisk (*) to address all bots.

The lines that follow contain Allow or Disallow instructions for search engines, so they know which parts of your site you want them to index and which parts you don’t.

See a sample robots.txt file:

User-Agent: *

Allow: /wp-content/uploads/

Disallow: /wp-content/plugins/

Disallow: /readme.html

Disallow: /wp-admin/

In this sample robots.txt file for WordPress, I have instructed all bots to index the image upload directory.

In the next two lines, I have disallowed them from indexing the WordPress plugins directory and the readme.html file.

In the final line, I have also disallowed indexing of the WordPress dashboard to strengthen security.
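One way to sanity-check rules like these before uploading them is Python’s standard `urllib.robotparser` module. The sketch below is an illustration, not a search engine’s exact behavior: the `www.example.com` base URL, the `allowed` helper, and the test paths are placeholders I have assumed. Python’s parser applies the first rule that matches, which may differ in edge cases from how a particular search engine resolves conflicting rules.

```python
from urllib.robotparser import RobotFileParser

# The sample rules, one directive per line with no blank lines inside
# the group (this parser treats a blank line as the end of a group).
SAMPLE_RULES = """\
User-agent: *
Allow: /wp-content/uploads/
Disallow: /wp-content/plugins/
Disallow: /readme.html
Disallow: /wp-admin/
"""

parser = RobotFileParser()
parser.parse(SAMPLE_RULES.splitlines())

BASE_URL = "https://www.example.com"  # placeholder domain


def allowed(path, agent="Googlebot"):
    """Return True if `agent` may fetch `path` under the sample rules."""
    return parser.can_fetch(agent, BASE_URL + path)


# Hypothetical paths chosen to exercise each rule.
for path in [
    "/wp-content/uploads/photo.jpg",
    "/wp-content/plugins/some-plugin/file.php",
    "/readme.html",
    "/wp-admin/",
    "/hello-world/",
]:
    print(path, "->", "allowed" if allowed(path) else "blocked")
```

Paths that match no rule, such as the last one, are allowed by default.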

Optimize the Robots.txt File

In its guidelines for webmasters, Google advises against using the robots.txt file to hide low-quality content. If you were thinking about using robots.txt to stop Google from indexing your category, date, and other archive pages, then that may not be a wise choice.

Remember, the purpose of robots.txt is to give bots instructions about the content on your site. It cannot force bots to stay away from your website, and a page blocked in robots.txt can still end up indexed if other sites link to it.

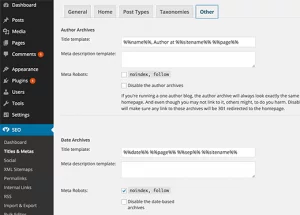

There are other WordPress plugins which allow you to add meta tags like nofollow and noindex to your archive pages. The WordPress SEO plugin also allows you to do this. I am not saying that you should have your archive pages deindexed, but if you want to do it, then that is the proper way of doing it.

You do not need to add your WordPress login page, admin directory, or registration page to robots.txt, because WordPress already adds a noindex meta tag to the login and registration pages.

It is recommended that you disallow the readme.html file in your robots.txt file. This readme file can be used by someone who is trying to figure out which version of WordPress you are using. An individual can still access the file simply by browsing to it, so the rule will not stop a targeted visitor.

On the other hand, if someone is running a malicious query to locate WordPress sites using a specific version, then this Disallow rule can protect you from those mass attacks.

You can also disallow your WordPress plugin directory. This will strengthen your site’s security if someone is looking for a specific vulnerable plugin to exploit for a mass attack.

Conclusion

Honestly, many popular blogs use very simple robots.txt files. Their contents vary, depending on the needs of the specific site:

User-agent: *

Disallow:

Sitemap: http://www.example.com/post-sitemap.xml

Sitemap: http://www.example.com/page-sitemap.xml

This robots.txt file simply tells all bots to index all content and provides links to the site’s XML sitemaps.
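To confirm how a file like this is read, here is a short Python sketch using the standard `urllib.robotparser` module. It assumes Python 3.8 or later (for `site_maps()`), and the bot name and page path in the check are placeholders.

```python
from urllib.robotparser import RobotFileParser

# The simple file from above, written without blank lines inside the group.
SIMPLE_RULES = """\
User-agent: *
Disallow:
Sitemap: http://www.example.com/post-sitemap.xml
Sitemap: http://www.example.com/page-sitemap.xml
"""

simple_parser = RobotFileParser()
simple_parser.parse(SIMPLE_RULES.splitlines())

# An empty Disallow value blocks nothing, so any path is allowed.
everything_allowed = simple_parser.can_fetch(
    "Bingbot", "http://www.example.com/any/page/"
)

# site_maps() returns the Sitemap URLs in the order they appear.
sitemaps = simple_parser.site_maps()

print(everything_allowed)
print(sitemaps)
```

The empty `Disallow:` line is what makes this file permissive. Writing `Disallow: /` instead would block the whole site.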